Monitoring the maximum latency for a scrape period with prometheus

Ever since I watched (several times) Gil Tene's talk about how we are measuring latency wrong I set myself to try get such values in a graph. His thesis is that we are using histograms and other similar tools for measuring percentiles of our response times and that that is wrong because we never see the maximum time; our tools are hiding them behind averages. What can we do about it with our current tools, in particular Prometheus and Grafana? Let's first define exactly what we want:

On one side we have a stream of log lines. These lines will be parsed and some values will be extracted from them. On the other side we have Prometheus trying to scrape a representative of those values. The representative that we want for every scrape is the max of all the values collected in that period between scrapes. For the next scraping period, we must forget this max and calculate the new one, and so on for each scrape interval.

Prometheus offers us three different metric types that could allow us to get such value. The first and obvious one would be a gauge, but there is no tool that does what I described in the previous paragraph.

The second one would be a histogram. For a histogram, we define a set of values that become boundaries. For each interval defined by those boundaries, the histogram gives us the amount of values that fall in them, and also below the lowest boundary and above the highest one. This is not enough because we can never have even a ballpark of what's the maximum value is, much less it's actual value. The max is lost among all the values that fall in the interval above the las boundary we define; we only know which interval contains the max. To at least get a ballpark you need to figure out an upper boundary, otherwise the max could be anywhere between the highest boundary and infinite. Maybe you're lucky and you have something like a timeout, but it's not often that a timeout fires during active data transmissions. On the other hand, if you're measuring transfer times for a service that returns files, you don't have an upper bound of how long it can take because it depends on the whole network path and the size of the file.

The last one is summaries. Summaries work slightly different. Instead of partitioning in terms of values, it partitions in terms of percentiles. One aspect of summaries is that you cannot aggregate them. Not only Prometheus' documentation mentions this, it also cites one of Gil Tene's articles about it1. But one of the peculiarities of percentiles is that if you take the 0 percentile, you get the minimum, and if you take the 100 percentile, you get the maximum. And as Gil Tene mentions in that same article, those are the only two values that you can aggregate because if you have all the minimums, the global minimum is just the minimum of all those values, and similarly for the maximum.

Now, how do we actually implement it? I had mentioned before, we have two tools for converting log lines into metrics

for Prometheus. One is mtail, and the other one is the Grok exporter. mtail doesn't seem to have any support for

summaries, so we are only left with the Grok exporter.

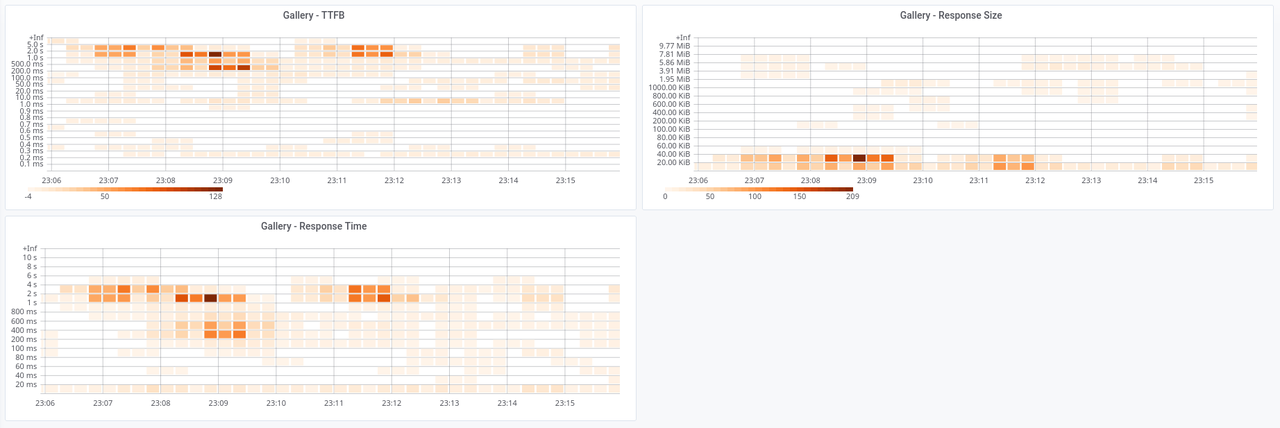

I tried to set up a Grok exporter configuration to do exactly that, only the minimum and the maximum for a given value, which in my case was time to first byte (TTFB), because I'm trying to apply it to a service that gives files as responses. The total latency of such service depends on the size of the file, but TTFB should not, so I'm interested in that.

I want the minimum and the maximum, so I set up a metric for that. I define it as summary, and I set it up for the zero and a hundred percentiles. And this is where the problem starts getting slightly more complicated.

First, the Grok exporter uses a library for computing the percentiles. That library does not accept just an array of the percentiles you are interested in; quoting the docs:

grok_exporter does not provide exact values for the quantiles, but only estimations. For each quantile, you also specify an uncertainty that is tolerated for the estimation

I don't know how much that impacts the two values I'm looking for, minimum and maximum, but I started with a 1% tolerance just to try:

- type: summary name: elevation_tile_ttfb_extremes help: Minimum and Maximum for TTFB match: '%{ELEVATION_LOG}' value: '{{multiply .first_byte 0.001}}' # µs to ms quantiles: { 0: 0.01, 100: 0.01 } # min, max max_age: 15s

And it didn't work.

First, it started complaining that when it was calculating some values, it was trying to convert the string that it got from the log lines to an integer, but there were empty strings and basically it was failing:

grok-exporter[1402204]: WARNING: skipping log line: error processing metric elevation_tile_ttfb: unexpected error while evaluating template for label __value__: template: __value__:1:2: executing "__value__" at <multiply .first_byte 0.001>: error calling multiply: error executing multiply function: cannot convert to floating point number: strconv.ParseFloat: parsing "": invalid syntax

(I had to split it into several lines so you don't have to scroll horizontally [so much]. I'm not going to comment on whether Golang's tendency to wrap errors to the point, shown above, where the chain of error messages becomes a type of horizontal stack trace, is a good thing or not).

Once I fixed that, instead I got a traceback that points to an index error inside that library.

2024/03/10 13:42:33 http: panic serving 127.0.0.1:49912: runtime error: index out of range [99] with length 1 goroutine 39 [running]: net/http.(*conn).serve.func1(0xc000420000) /usr/local/go/src/net/http/server.go:1801 +0x147 panic(0xb7d0e0, 0xc000038060) /usr/local/go/src/runtime/panic.go:975 +0x3e9 github.com/fstab/grok_exporter/vendor/github.com/beorn7/perks/quantile.(*Stream).Query(0xc00045cd20, 0x4059000000000000, 0x4051e3d70a3d70a4) /go/src/github.com/fstab/grok_exporter/vendor/github.com/beorn7/perks/quantile/stream.go:165 +0x16f github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus.(*summary).Write(0xc000144c00, 0xc000175b20, 0x3, 0xc00023f412) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/summary.go:310 +0x3c7 github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus.processMetric(0xca4840, 0xc000144c00, 0xc00023f5c0, 0xc00023f5f0, 0x0, 0x0, 0x0) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/registry.go:596 +0xa2 github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus.(*Registry).Gather(0xc000100410, 0x0, 0x0, 0x0, 0x0, 0x0) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/registry.go:490 +0x8ca github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp.HandlerFor.func1(0x7fe9cd2298f8, 0xc00050e280, 0xc00022e300) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp/http.go:126 +0x99 net/http.HandlerFunc.ServeHTTP(0xc00017a230, 0x7fe9cd2298f8, 0xc00050e280, 0xc00022e300) /usr/local/go/src/net/http/server.go:2042 +0x44 github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp.InstrumentHandlerInFlight.func1(0x7fe9cd2298f8, 0xc00050e280, 0xc00022e300) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp/instrument_server.go:40 +0xab net/http.HandlerFunc.ServeHTTP(0xc00050d170, 0x7fe9cd2298f8, 0xc00050e280, 0xc00022e300) /usr/local/go/src/net/http/server.go:2042 +0x44 github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp.InstrumentHandlerCounter.func1(0xca9840, 0xc00024a000, 0xc00022e300) /go/src/github.com/fstab/grok_exporter/vendor/github.com/prometheus/client_golang/prometheus/promhttp/instrument_server.go:100 +0xda net/http.HandlerFunc.ServeHTTP(0xc00050d260, 0xca9840, 0xc00024a000, 0xc00022e300) /usr/local/go/src/net/http/server.go:2042 +0x44 net/http.(*ServeMux).ServeHTTP(0x131bd20, 0xca9840, 0xc00024a000, 0xc00022e300) /usr/local/go/src/net/http/server.go:2417 +0x1ad net/http.serverHandler.ServeHTTP(0xc000196000, 0xca9840, 0xc00024a000, 0xc00022e300) /usr/local/go/src/net/http/server.go:2843 +0xa3 net/http.(*conn).serve(0xc000420000, 0xcac140, 0xc000244000) /usr/local/go/src/net/http/server.go:1925 +0x8ad created by net/http.(*Server).Serve /usr/local/go/src/net/http/server.go:2969 +0x36c

In my last post about this, I mentioned that the Grok exporter has been unsupported for four years, nobody has written any single line of code after that, and there are many open issues and even several requests still open and nothing has happened in the last four years. And this library, the one that calculates the percentiles, is even worse. It's been five years since they last updated it, which means that there's no chance that even if I report the bug, I will get releases of both that library and then the Grok exporter to fix it. And I don't feel like reading a whole paper in statistics at this point of my life just to solve this problem2.

So what options do we have? Well, I think there's only one, and it's writing your own tool that fulfills the description in the second paragraph. I'm not really sure I have the energy to do it, but I think this will keep jumping around my head and I will succumb and do it, mostly because I think it's important.

But until that moment occurs, I have no solution for this problem, and that means that in the meantime I will have to live with histograms.

-

It's actually interesting that Prometheus people are aware of Gil Tene's ideas and yet don't have a solution for them. ↩

-

I remember being a frevient free software advocate for reasons exactly like this. But then, I don't have the energy nor I think I can convince my manager to get someone paid to fix it. ↩